Meta And Microsoft Release Llama 2 Free For Commercial Use And Research

The Models (or LLMs) API can be used to connect to popular LLM providers such as Hugging Face or Replicate, where all types of Llama 2 models are hosted. The Prompts API implements the useful...

Meta Llama 2 on Amazon Bedrock: quickly and easily build generative AI-powered experiences. Get started with Llama 2 on Amazon Bedrock. A separate note on Amazon Bedrock: not live yet, can't find pricing, and unclear if it'll have Llama 2 at launch.

Special promotional pricing for Llama-2 and CodeLlama models (chat, language, and code models):

| Model size | Price per 1M tokens |
| --- | --- |
| Up to 4B | 0.1 |
| 4.1B - 8B | 0.2 |
| 8.1B - 21B | 0.3 |
| 21.1B - 41B | 0.8 |
| 41B - 70B | ... |

Designed with OpenAI frameworks in mind, this pre-configured AMI stands...
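
To make the per-token pricing concrete, here is a minimal Python sketch that looks up a tier by model size and estimates the cost of a request. It assumes the garbled prices in the text ("01", "02", "03", "08") mean 0.1, 0.2, 0.3, and 0.8 per 1M tokens. The currency is not stated in the text, and the price for the 41B - 70B tier is cut off, so that tier is left out. The names `PRICE_TIERS`, `price_per_million`, and `estimate_cost` are hypothetical.

```python
# Tiers as read from the pricing text: (largest model size in billions of
# parameters, price per 1M tokens). Assumption: "01" in the text means 0.1, etc.
# The 41B - 70B price is cut off in the text, so that tier is not listed here.
PRICE_TIERS = [(4.0, 0.1), (8.0, 0.2), (21.0, 0.3), (41.0, 0.8)]


def price_per_million(model_size_b: float) -> float:
    """Return the price per 1M tokens for a model of the given size (in billions)."""
    for max_size_b, price in PRICE_TIERS:
        if model_size_b <= max_size_b:
            return price
    raise ValueError("no price listed for models larger than 41B")


def estimate_cost(model_size_b: float, tokens: int) -> float:
    """Estimate the cost of processing `tokens` tokens with a model of this size."""
    return price_per_million(model_size_b) * tokens / 1_000_000


if __name__ == "__main__":
    # A 7B model falls in the 4.1B - 8B tier; a 13B model in the 8.1B - 21B tier.
    print(estimate_cost(7, 2_000_000))
    print(estimate_cost(13, 500_000))
```

Under these assumptions, a 7B model is billed at the 4.1B - 8B rate and a 13B model at the 8.1B - 21B rate.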

Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets. Send me a message or upload an...

For an example of how to integrate LlamaIndex with Llama 2, see here. We also published a completed demo app showing how to use LlamaIndex to chat with Llama 2 about live data via the...

Choosing which model to use: there are four variant Llama 2 models on Replicate, each with its own strengths. 70 billion parameter model fine-tuned on...

In this post, we'll build a Llama 2 chatbot in Python using Streamlit for the frontend, while the LLM backend is handled through API calls to the Llama 2 model hosted on...
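
A chatbot like the one described has to turn the "personality" (system prompt) and the conversation so far into a single prompt string. The sketch below uses the `[INST]` / `<<SYS>>` layout that Meta documents for the Llama-2-Chat models. The helper name `build_prompt` is hypothetical. Whether a given hosted API expects this raw string or applies the template itself is an assumption to check against that API's documentation, and some tokenizers add the `<s>` token on their own.

```python
from typing import List, Tuple

# Delimiters used by the Llama-2-Chat prompt layout.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_prompt(system: str, history: List[Tuple[str, str]], user_msg: str) -> str:
    """Build a Llama-2-Chat style prompt.

    `history` holds completed (user, assistant) turns; `user_msg` is the new
    message awaiting a reply. The system prompt is folded into the first
    user turn only.
    """
    parts = []
    first = True
    for user, assistant in history:
        content = f"{B_SYS}{system}{E_SYS}{user}" if first else user
        parts.append(f"<s>{B_INST} {content.strip()} {E_INST} {assistant.strip()} </s>")
        first = False
    content = f"{B_SYS}{system}{E_SYS}{user_msg}" if first else user_msg
    parts.append(f"<s>{B_INST} {content.strip()} {E_INST}")
    return "".join(parts)


if __name__ == "__main__":
    print(build_prompt("You are a helpful assistant.", [("Hi", "Hello!")], "Name my cat."))
```

In a Streamlit app, the returned string would be what gets sent to the hosted model on each turn, with the reply appended to `history` afterwards.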

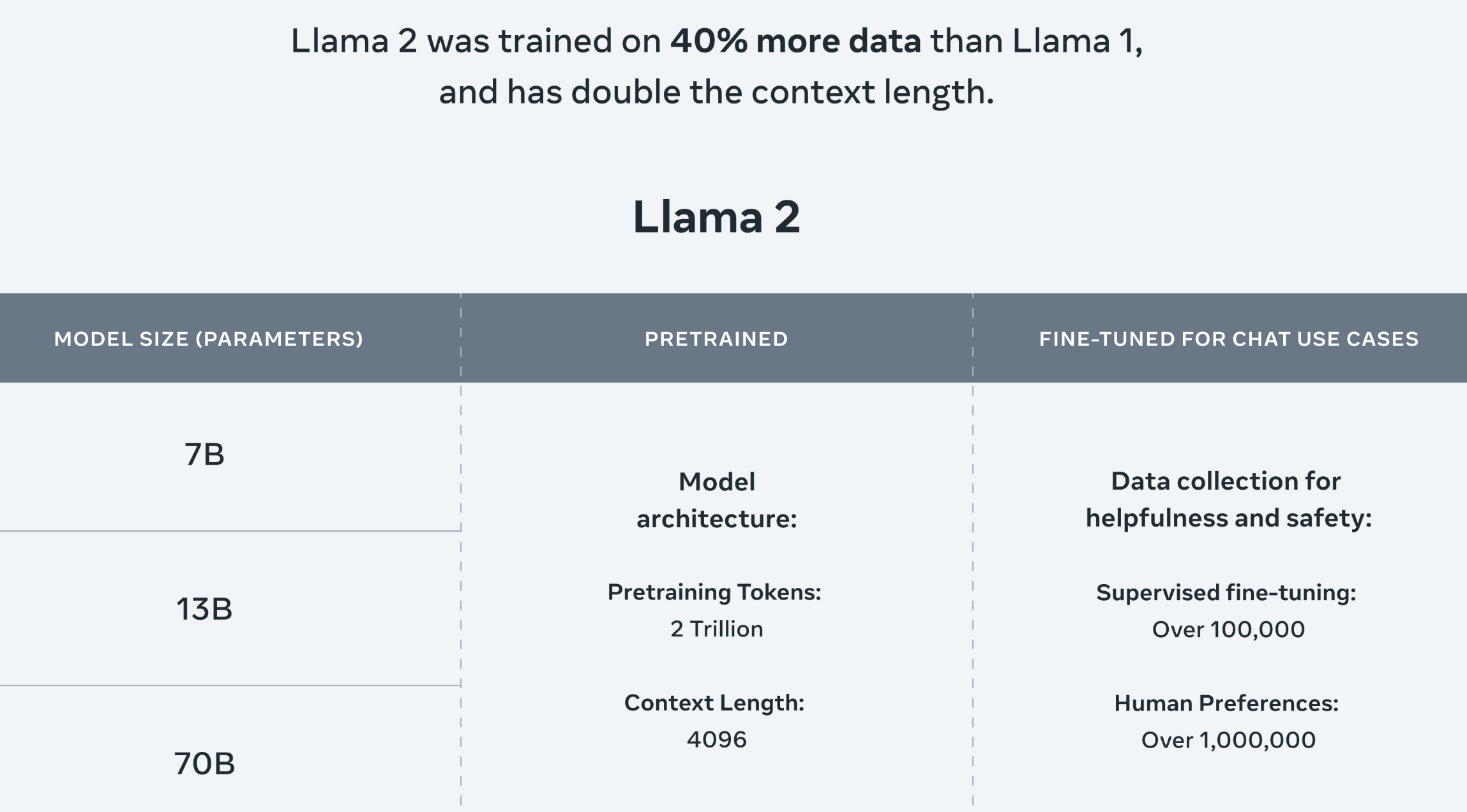

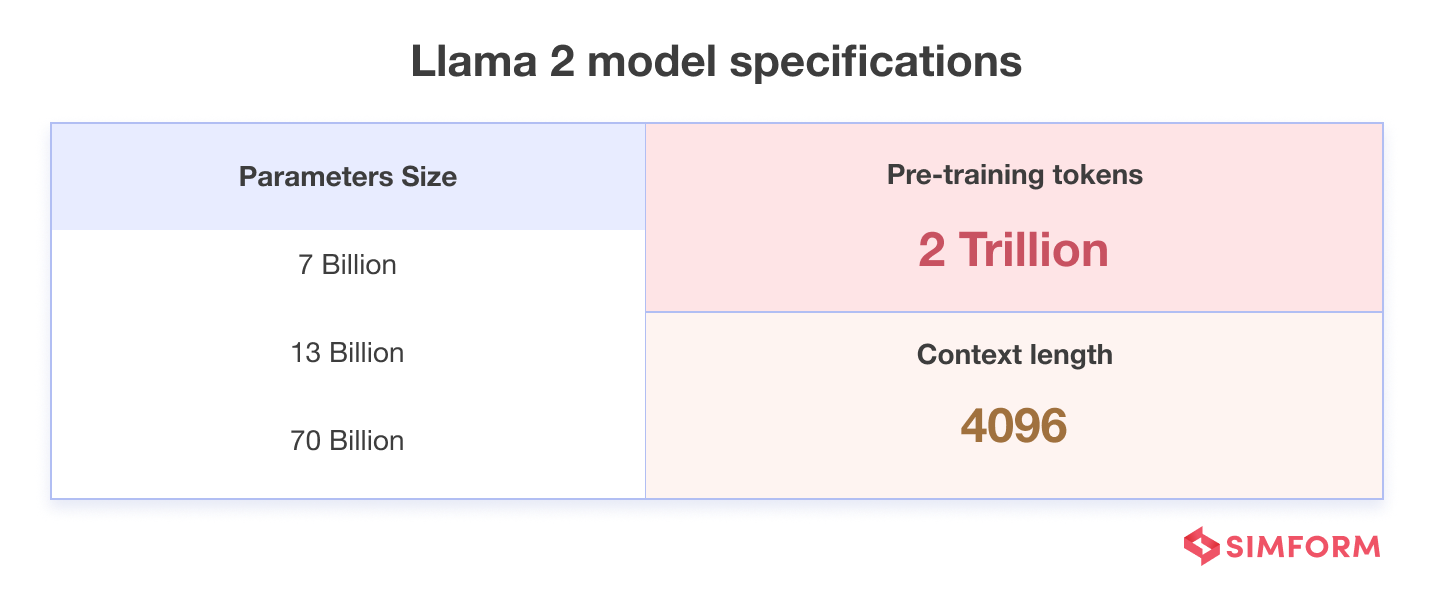

All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and have...

Variations: Llama 2 comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion) as well as pretrained and fine-tuned variations.

Llama 2: the next generation of our open source large language model, available for free for research and...

The Llama 2 7B model on Hugging Face (meta-llama/Llama-2-7b) has a PyTorch .pth file.

Llama-2-Chat models outperform open-source chat models on most benchmarks we tested and in our human...

Fine-tuning Llama 2 13B takes longer than fine-tuning Llama 2 7B, owing to its larger model size.
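
As a rough illustration of what the three sizes mean in practice, the following Python sketch estimates the memory needed just to hold the weights, as parameter count times bytes per parameter. It uses the nominal sizes (7B, 13B, 70B) rather than exact parameter counts, takes 1 GB as 10^9 bytes, and ignores activations, the KV cache, and optimizer state, so real requirements are higher. The function name `weight_memory_gb` is hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes) for a model.

    Billions of parameters times bytes per parameter gives billions of
    bytes, which is the size in GB directly.
    """
    return params_billions * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for size in (7, 13, 70):
        print(f"Llama 2 {size}B: ~{weight_memory_gb(size):.0f} GB in fp16, "
              f"~{weight_memory_gb(size, 'int4'):.1f} GB in int4")
```

The same scaling is one reason the 13B model is slower to fine-tune than the 7B model: there are nearly twice as many weights to read and update at every step.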

Discover how to run Llama 2, an advanced large language model, on your own machine. With up to 70B parameters and a 4k-token context length, it's free and open-source for research.

The main benefits of running Llama 2 locally are full control over your data and conversations, as well as no usage limits. You can chat with your bot as much as you want and...

This page describes how to interact with the Llama 2 large language model (LLM) locally using Python, without requiring internet, registration, or API keys.

In this blog post, we'll cover three open-source tools you can use to run Llama 2 on your own devices: llama.cpp (Mac/Windows/Linux), Ollama (Mac), and MLC LLM (iOS/Android).
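
With no usage limits, a local chat can grow past the 4k-token context length, so older messages eventually have to be dropped. The Python sketch below keeps the most recent messages that fit within a token budget. The token counter is a deliberately crude stand-in (about 4 characters per token) and is an assumption. A real setup would count tokens with the model's own tokenizer. The names `rough_token_count` and `trim_history` are hypothetical.

```python
from typing import List

CONTEXT_TOKENS = 4096  # Llama 2's 4k-token context length


def rough_token_count(text: str) -> int:
    """Crude token estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[str], reserve_for_reply: int = 512,
                 limit: int = CONTEXT_TOKENS) -> List[str]:
    """Keep the newest messages whose estimated tokens fit in the budget.

    `reserve_for_reply` tokens are held back so the model has room to answer.
    """
    budget = limit - reserve_for_reply
    kept: List[str] = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for msg in reversed(messages):
        cost = rough_token_count(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    chat = ["first message " * 500, "second message " * 500, "latest question?"]
    print(len(trim_history(chat)))
```

Dropping whole messages from the oldest end is the simplest policy. Summarizing older turns instead is a common alternative, but it is not shown here.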
